Transdisciplinary Education for Deep Learning, Creativity and Innovation

ARTICLE | July 24, 2018 | BY Rodolfo A. Fiorini

Abstract

Today American universities offer more than 1000 specialized disciplines and subdisciplines, and their European counterparts are following them. The mental world we live in is infinitely divided into categories, subjects, disciplines, topics, and ever more specialized subdivisions. Our past knowledge is organized into “silos”: good for grain, not for brain. Forcing societies to fit their knowledge into boxes with unrelated arbitrary boundaries, without understanding the deep reasons behind them, may lead to serious consequences, like those we witness in world affairs today. Reality is the temporal unfolding of events, and path dependence is a central concept to explain complex emergent phenomena. In modern times, specialization has overtaken broader fields of knowledge and multidisciplinary research. To overcome the missing path dependence problem, society, together with scientists and scholars, must move toward interdisciplinary and transdisciplinary education. Interdisciplinary education consists, for instance, of a multidisciplinary relational reformulation of problems. Transdisciplinary education, on the other hand, concerns the study of how to achieve optimal reformulations of those problems in order to unlock their fundamental properties. To achieve an effective deep learning system, we need to recall the dawn of transdisciplinary thinking in Jean Piaget’s words. Transdisciplinary education and transdisciplinarity propose an understanding of the present world by providing more unified knowledge, to overcome the famous paradoxes of relative knowledge and perfect knowledge and to unfold hidden creativity and innovation. Three fundamental examples are presented and discussed in the paper.

1. Introduction

“Horror Vacui” (a Latin-derived term that means “fear of emptiness”) may have acted, consciously or unconsciously, as a “dread of empty space” in Nature on primeval man, who was overwhelmed by an excess of emptiness and forced to live in a world not yet filled up with signs, symbols, meanings and … data.

Many eons later (today), laymen can find themselves in a “Horror Pleni” (a Latin-derived term that means “fear of stuffed space”), that is, in information overload, unable to tell an optimally encoded, information-rich message from a random jumble of signs (Fiorini, 2014). Horror Pleni is a neologism coined by the Italian art critic, painter, and philosopher Gillo Dorfles in the 21st century (Dorfles, 2008). Horror Vacui and Horror Pleni are two fundamental concepts referring to humans’ intellectual operative range. Their deep meaning can be translated and extended to many different disciplined areas like art, literature, sociology, science, etc. As a matter of fact, in both situations our unconscious background is pervaded with ancestral, primeval, cosmic, and archetypal symbols and signs, and with dread: a dread that our current existential level is amplifying into higher levels of global confusion, chaos and/or abysmal isolation and deep internal silence.

The discovery of Nature as a reality prior to and in many ways escaping human purposes begins with the story of the sign. The story of the sign, in short, is of a piece with the story of philosophy itself, and begins, all unknowingly, where philosophy itself begins, though not as philosophy. Even if we do not have to explore every theme of that history, we must yet explain all those themes that pertain to the presupposition of the sign’s being and activity, in order to arrive at that being and activity with sufficient intellectual tools to make full sense of it as a theme in its own right. And those themes turn out to be nothing less or other than the very themes of ontology and epistemology forged presemiotically, as we might say, in the laboratory for discovering the consequences of ideas that we call the history of philosophy (Deely, 2001, pp. 19-20). If the discovery of the sign began, as a matter of fact, unconsciously with the discovery of Nature, then the beginning of semiotics was first the beginning of philosophy, for it is philosophy that makes the foundation of semiotics possible, even if semiotics is what philosophy must eventually become (Eco, 1976). Italian semiotician Umberto Eco argued that “Iconic Language” has a “triple articulation”: Iconic Figures, Semes (combinations of Iconic Figures), and Kinemorphs (combinations of Semes), as in a classical movie (Stam, 2000). A semiotic code which has “double articulation” (as in the case of verbal language) can be analyzed into two abstract structural levels: a higher level called “the level of first articulation” and a lower level, “the level of second articulation” (Hjelmslev, 1961).

Quite often, from an individual perspective, external events seem to be an entirely random series of happenings. But looked at over a long period of time, and tracking the branching changes in the planet that follow from them, chaos does produce a form of identifiable order. Patterns appear out of chaos. And this, in its essence, is chaos theory: finding order in chaos (Wheatley, 2008). Chaos theory falls into that category of scientific ideas that few actually understand but many have heard of, due to its expansive, epic-sounding principles and thoughts. Inherent to the theory is the idea that extremely small changes produce enormous effects, but ones that can only be described fully in retrospect. Why? The fundamental reason is more evident in social systems. In social systems any signal is actually small and weak, never strong. Weak signals are “the real foundation of the whole society” (Ansoff, 1975; Poli, 2013). Accurate prediction is practically impossible, and it is known that the occurrence of extreme events cannot be predicted from history (Taleb, 2015). The main reason is that even our most advanced instrumentation systems will never be accurate enough to capture the full essence of a real event. Nevertheless, novel entropy minimization techniques can improve our traditional capability of capturing knowledge from experience by many orders of magnitude, according to the recently proposed CICT (Computational Information Conservation Theory) (Fiorini & Laguteta, 2013; Fiorini, 2014, 2015, 2016; Wang et al., 2016).
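
The claim that extremely small changes produce enormous effects can be illustrated with a minimal sketch. The logistic map used here is a standard textbook example of chaotic dynamics and is an assumption of this illustration, not a model taken from the works cited above; the function name `logistic_trajectory`, the parameter value r = 4 and the starting values are likewise hypothetical choices.

```python
def logistic_trajectory(x0, r=4.0, steps=60):
    """Iterate the logistic map x -> r * x * (1 - x) and return every visited state."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


# Two starting points that differ by one part in ten billion (illustrative values).
a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-10)

# Distance between the two trajectories at each step.
gaps = [abs(x - y) for x, y in zip(a, b)]

for step in (0, 10, 20, 30, 40, 50, 60):
    print(f"step {step:2d}: gap = {gaps[step]:.3e}")
```

In this sketch the gap stays tiny during the first iterations and later becomes comparable to the size of the whole interval, so the divergence can be traced only in retrospect.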

Mankind’s best conceivable worldview is at most a representation, a partial picture of the real world, an interpretation centered on man. We inevitably see the universe from a human point of view and communicate in terms shaped by the exigencies of human life in an uncertain natural environment. What is difficult is processing the highly conditioned sensory information that comes in through the lens of an eye, through the eardrum, or through the skin. In fact, at each instant, a human being receives enormous volumes of data, and we have only a finite number of brain cells to manage all the data we receive quickly enough.

According to current theories, brain researchers estimate that the human mind takes in 11 million pieces (tokens) of information per second through our five senses but is able to be consciously aware of only 40 of them (Koch et al., 2006; Wilson, 2004; Zimmermann, 1986). Hence, our neural interfaces and our brain have to filter information to the extreme. To better clarify the computational paradigm, we can refer to the following principle: “Animals and humans use their finite brains to comprehend and adapt to an infinitely complex environment” (Freeman & Kozma, 2009). We are constantly reconstructing the world’s essential and superficial characteristics. This reconstruction, which also touches deeper characteristics, is the outcome of the on-going evolution of our relationships in a world full of surprises and challenges (Espejo & Reyes, 2011).
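
Taking the two quoted estimates at face value, the degree of filtering can be written as a simple ratio. The symbols $N_{\text{in}}$ (tokens received per second) and $N_{\text{aware}}$ (tokens reaching conscious awareness) are introduced here only for illustration.

$$
\frac{N_{\text{aware}}}{N_{\text{in}}} = \frac{40}{11\,000\,000} \approx 3.6 \times 10^{-6},
\qquad
\frac{N_{\text{in}}}{N_{\text{aware}}} = 275\,000 .
$$

On these figures, roughly one token in 275,000 reaches conscious awareness.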

“Consilience” is the term coined by William Whewell in 1840 (Whewell, 1840) and later used by Edward O. Wilson (Wilson, 1998) for the integration of knowledge that involves a continuous remapping of reality, a constant shift of conceptual frames. Improving the consilience between disciplines of knowledge is a worthwhile philosophical aim. Arguably, it makes reality more coherent. Under consilience, the strength of evidence for any particular conclusion is related to how many independent methods support the conclusion, as well as to how different these methods are. Those techniques with the fewest (or no) shared characteristics provide the strongest consilience and result in the strongest conclusions. This also means that confidence is usually strongest when we consider evidence from different fields, because the techniques are usually very different.
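
One hedged way to see why independence matters is a simple probabilistic sketch. It assumes, purely for illustration, that a conclusion is supported by $n$ methods, that method $i$ would wrongly support it with probability $p_i$, and that these errors are statistically independent; neither the symbols nor the assumptions come from Whewell or Wilson.

$$
P(\text{all } n \text{ methods err together}) = \prod_{i=1}^{n} p_i
$$

For example, three independent methods with $p_i = 0.1$ each give $0.1^3 = 10^{-3}$. When methods share characteristics, their errors tend to be correlated and the joint error probability can exceed this product, which is consistent with the observation that the least similar methods provide the strongest support.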

In modern times, specialization has overtaken broader fields of knowledge and multidisciplinary research. The mental world we live in today is infinitely divided into categories, subjects, disciplines, topics, and more and more specialized subdivisions. Our past knowledge is organized into “silos”: good for grain, not for brain. Therefore, their consilience is quite poor. Nevertheless, American universities now offer more than 1000 specialized subdisciplines, and their European counterparts are following them. Forcing societies to fit their knowledge into boxes with unrelated arbitrary boundaries, without understanding the deep reasons behind them, may lead to serious consequences, like those we witness in world affairs today. Why are there so many different disciplines? In the next section we open the door to the root of this problem.

2. Fundamental Physics: Roots, Multidisciplinary and Interdisciplinary Education

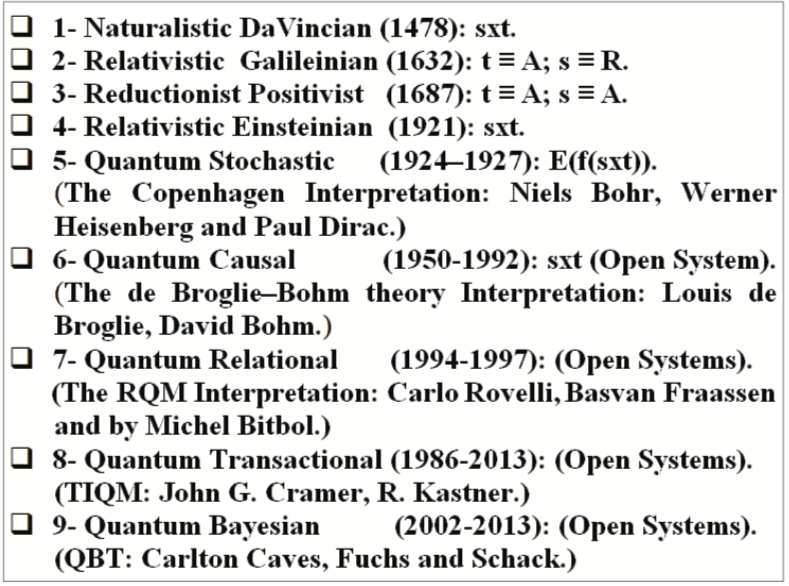

Over the centuries, the human worldview about our spacetime universe has been shifting through many evolving scientific paradigms or shared conceptual systems at an accelerating pace. A conceptual system is an integrated system of concepts that supports a coherent vision of some aspect of the world (Byers, 2015). We found more than twenty proposed and renowned worldview interpretations in the past scientific literature and grouped them into nine major conceptual disciplined areas (Figure 1).

Figure 1: More than twenty proposed and renowned worldview interpretations in scientific literature, grouped into nine major conceptual disciplined areas (see text).

We start with the da Vincian approach, which considered our spacetime universe as an indissoluble unity, and arrive at the Galilean approach, where spacetime was split into absolute time and relative space. Then came the Reductionist Positivist approach of Newton, which considered absolute time as completely separated from absolute space, until the advent of the Relativistic Einsteinian approach, which rejoined absolute time and absolute space into an indissoluble unity called spacetime.

In the same period the Quantum Stochastic approach was developed, propelled by Niels Bohr, Werner Heisenberg, Paul Dirac and the Copenhagen School in the 1920s, where a probabilistic estimation formula for spacetime functions was adopted (Schrödinger’s equation). This step of development culminated with the construction of the theory of quantum electrodynamics (QED) in the 1950s. The door to new quantum mechanics theories was now open. In the mid-1970s, upon experimental confirmation of the existence of quarks, the Standard Model (STM) of elementary particle physics was developed. The STM distinguishes between fundamental and accidental symmetries. The distinction is not based on empirical features of the symmetry, nor on a metaphysical notion of necessity. A symmetry is fundamental to the extent that other aspects of nature depend on it, and it is recognized as fundamental by its being theoretically well-connected within the theoretical description of nature, that is, by its theoretical rigidity. Symmetrical properties affect world level structures and properties in a way analogous to how phoneme level and syllable level properties create “double articulation” in human language (Hockett, 1958, 1960).

There is a very general point about the analysis of scientific knowledge in this. In any particular case of analysis of a symmetry (an explanation, a law), the answer to the question, “What makes this a fundamental symmetry (a genuine explanation, a law and not just an accidental regularity)?” is long and tangled, showing the pervasive dependence of other features of nature on this one. If such a long and tangled answer cannot be found, then there is no reason to think that this is a fundamental symmetry (an explanation, a law), because the entanglement is really all there is to being fundamental (an explanation, a law). This interconnectedness is part of the essential nature of science (Kosso, 2000).

Although the STM is believed to be theoretically self-consistent and has demonstrated huge and continued successes in providing experimental predictions, it does leave some phenomena unexplained and it falls short of being a complete theory of fundamental interactions. It does not incorporate the full theory of gravitation as described by general relativity, or account for the accelerating expansion of the universe (as possibly described by dark energy). The model does not contain any viable dark matter particle that possesses all of the required properties deduced from observational cosmology. Nor does it incorporate neutrino oscillations (and their non-zero masses).

The STM of particle physics covers the electromagnetic, the weak and the strong interaction. However, the fourth fundamental force in nature, gravitation, has defied quantization so far. Although numerous attempts have been made in the last 80 years, and in particular very recently, there is no commonly accepted solution up to the present day. One basic problem is that the mass, length and time scales that quantum gravity theories deal with are so extremely small that it is almost impossible to test the different proposals, even with the current, most advanced instrumentation resources.

The development of the STM was driven by theoretical and experimental particle physicists alike. For theorists, the STM is a paradigm of quantum field theory (QFT), which exhibits a wide range of physics including spontaneous symmetry breaking, anomalies, non-perturbative behavior, etc. It is used as a basis for building more exotic models that incorporate hypothetical particles, extra dimensions, and elaborate symmetries (such as supersymmetry) in an attempt to explain experimental results at variance with the STM, such as the existence of dark matter and neutrino oscillations.

Since the second half of the 1970s many research groups have tried to promote their interpretations of the quantum world. Other approaches to resolving conceptual problems introduced new mathematical formalisms, and so proposed additional theories with their own interpretations. An example is the “Quantum Causal” approach or “Bohmian mechanics,” which is empirically equivalent to the standard formalisms, but requires extra equations to describe the precise trajectory through state space taken by the actual world. This extra ontological cost provides the explanatory benefit of explaining how the probabilities observed in measurements can arise somewhat naturally from a deterministic process.

Since the 1990s, there has been a resurgence of interest in “non-collapse theories.” The Stanford Encyclopedia of Philosophy, as of 2017 (IQM, 2017), groups interpretations of quantum mechanics into five areas: “Bohmian mechanics” (pilot-wave theories) (Goldstein, 2013), “collapse theories” (Ghirardi, 2011), “many-worlds interpretations” (Vaidman, 2015), “modal interpretations” (Lombardi & Dieks, 2014), and “relational interpretations” (Laudisa & Rovelli, 2013), as classes into which most suggestions may be grouped.

The most common interpretations of quantum mechanics are listed as a tabular comparison in Wikipedia (IQM, 2017). The attribution values shown in the cells of that table are not without controversy, for the precise meanings of some of the concepts involved are unclear and, in fact, are themselves at the center of the controversy surrounding the given interpretation in the scientific community. No empirical evidence exists that distinguishes among these interpretations. To that extent, the physical theory stands, and is consistent within itself and with observation and experiment; difficulties arise only when one attempts to “interpret” the theory.

Nevertheless, designing experiments that would test the various interpretations is, and will remain, a subject of active research in the next few years. Most of these interpretations have variants. For example, it is difficult to get a precise definition of the original “Copenhagen interpretation,” as it was developed and argued about by many people. Interpretations of quantum mechanics attempt to provide a conceptual framework for understanding the many aspects of quantum mechanics that are not easily handled by the conceptual framework used for classical physics (Science 1.0).

At present, the most important extant versions of quantum gravity theories (Weinstein & Rickles, 2015) are canonical quantum gravity, loop theory and string theory. Canonical quantum gravity approaches leave the basic structure of QFT untouched and just extend the realm of QFT by quantizing gravity. Other approaches try to reconcile quantum theory and general relativity theory not by supplementing the reach of QFT but rather by changing QFT itself. String theory, for instance, proposes a completely new view concerning the most fundamental building blocks: it does not merely incorporate gravitation but formulates a new theory that describes all four interactions in a unified way, in terms of strings (Kuhlmann, 2012).

It is not hard to see why gravitation is far more difficult to deal with than the other three forces. Electromagnetic, weak and strong forces all act in a given spacetime. In contrast, gravitation is, according to GRT (General Relativity Theory), not an interaction that takes place in time; rather, gravitational forces are identified with the curvature of spacetime itself. This viewpoint that gravity is not a physical interaction allows what are classically regarded as gravitational forces to be consistent with the relativistic principle of causality (that no interaction can travel faster than the speed of light), and it leads to the possibility of an infinite universe, our own observable universe being limited to the part that is receding from us at “observed” speeds less than the velocity of light. Thus quantizing gravitation could amount to quantizing spacetime, and it is not at all clear what that could mean. One controversial proposal is to deprive spacetime of its fundamental status by showing how it “emerges” in some non-spatio-temporal theory. The “emergence” of spacetime then means that there are certain derived terms in the new theory that have some formal features commonly associated with spacetime.

The most recent, sound proposal for one fundamental solution to quantum gravity is under evaluation in the scientific community. According to José Vargas, the Kähler equation, which is based on Cartan’s exterior calculus and which generalizes the Dirac equation, solves the fine structure of the hydrogen atom without gamma matrices. A Kaluza-Klein (KK) space results, where geometry and general relativity meet quantum mechanics. Gravitation and quantum mechanics are thus unified ab initio, while preserving their respective identities. Weak and strong interactions, and also classical mechanics, start to emerge. Each does so, however, in its own idiosyncratic way. All physical concepts are to be viewed as emergent. Emergence of this type and reductionism are as inseparable as the two faces of an ordinary surface (Vargas, 2014).

In general, any interpretation of quantum mechanics is a conceptual or argumentative way of relating:

- The “formalism” of quantum mechanics (mathematical objects, relations, and conceptual principles that are intended to be interpreted as representing quantum physical objects and processes of interest), and

- The “phenomenology” of quantum physics (observations made in empirical investigations of those quantum physical objects and processes, and the physical meaning of the phenomena, in terms of ordinary understanding).

Two qualities vary among interpretations, according to hermeneutics:

- Ontology (claims about what things, such as categories and entities, “exist” (reality) in the world, and what theoretical objects are related to those real existents);

- Epistemology (claims about the possibility, scope, and means toward relevant “knowledge” of the world).

In the Philosophy of Science (Epistemology), the distinction of knowledge versus reality is termed “epistemic” versus “ontic.” A general law is a regularity of outcomes (epistemic), whereas a causal mechanism may regulate the outcomes (ontic). A phenomenon can be interpreted in either an ontic or an epistemic manner. For instance, indeterminism may be attributed to limitations of human observation and perception (epistemic), or may be explained as a real existent, possibly encoded in the universe (ontic). Confusing the epistemic with the ontic, as when one presumes that a general law actually “governs” outcomes and that the statement of a regularity has the role of a causal mechanism, is a category mistake.

In a broad sense, scientific theory can be viewed from the standpoint of scientific realism, as offering an approximately true description or explanation of the natural world, or from the standpoint of antirealism. A realist stance seeks the epistemic and the ontic, whereas an antirealist stance seeks the epistemic but not the ontic. In the 20th century’s first half, antirealism was mainly logical positivism, which sought to exclude unobservable aspects of reality from scientific theory. Since the 1950s, antirealism has become more modest, mostly taking the form of instrumentalism, which permits talk of unobservable aspects but ultimately discards the very question of realism and poses scientific theory as a tool to help humans make predictions, not to attain metaphysical understanding of the world. The instrumentalist view can best be explained by the famous quote of David Mermin, “Shut up and calculate”, often misattributed to Richard Feynman.

Our current worldview supports a universe that progressively expands in time and generates unprecedented levels of complexity, evolving as an emergent process which is creative and not as a replay of pre-established models, not as the projection onto time of a timeless set of Platonic models and mathematical ideas. American theoretical physicist John A. Wheeler, a major theorist of the “participatory anthropic principle” (PAP) (PAP, 2017), formulates a similar principle from the point of view of quantum physics:

“we used to think that the electron in the atom had a position and a velocity regardless of whether we measure it or not….”, but the observer’s position has to be taken into account, and here quantum theory ties in with George Berkeley’s metaphysics of observation.

Therefore, for a multidisciplinary education, we can start from our nine major conceptual disciplined areas (Figure 1) and group them further into three fundamental reference paradigm areas: the Positivist (Newtonian), the Relativist (Einsteinian) and the Constructivist (Quantum) conceptual framework (CF). These three fundamental CFs or reference paradigm areas constitute just the minimal elementary knowledge needed to build a strong and reliable multidisciplinary competence, from which an intercultural competence can be grown. But we must still beware of the “paradigmatic confusion” mistake.

Paradigmatic or shared conceptual system confusion occurs when incompatible epistemological assumptions are inadvertently mixed with explanations and practice. This last phenomenon is particularly troublesome for interdisciplinary and intercultural relations, because a disciplinary field relies on “theory into practice” as its criterion for conceptual relevance. If the paradigm underlying a practice is different from the explanation attached to the practice, both the credibility of the concept and the effectiveness of the method suffer.

According to Wilson, the ultimate goal of our epistemological activities is a single complete theory of everything, and the foundation of this theory must be the sciences. Although each discipline seeks knowledge according to its own methods, Wilson asserts that “the only way either to establish or refute consilience is by methods developed in the natural sciences.” The end of this process would realize a dream that goes back to Thales of Miletus, the sixth-century B.C. thinker whom Wilson credits with founding the physical sciences.

Modern philosophy was initiated through a rupture from earlier thought, e.g., Bacon’s smashing of the idols, Locke’s imagining the mind as a blank tablet, and Descartes’ systematic doubt. This created an empty space reserved exclusively for clear and distinct ideas joined with the rigorously deductive process of “objective” thinking essential to science. More recently questions have been raised regarding, not the fruitfulness, but the adequacy of this mode of thinking. Great efforts are now being made to broaden this field of knowledge to include human subjectivity and other modes of awareness, such as deep thinking, meditative thinking, creative imagination and phenomenological investigation. The contrast between the uncertainty of the future and the fixity of the past is, despite some postmodernist strictures, a basic presupposition in our everyday experience of time: it provides, actually, much of the ground for an ontological distinction between the future and the past. Hence, philosophy is expanding to include hermeneutic recognition, interpretation and relation between the multiple values, cultures and civilizations of the many peoples of the world and their varied modes of understanding. Philosophers now are challenged to unveil at a deeper level the cumulative freedom by which we shape ourselves with the subjective terms of values and virtues, which in turn constitute cultures and their traditions. These constitute the hermeneutic vantage points or horizons in terms of which we understand, interpret and respond in the many dimensions of our life.

There are many obstacles to Wilson’s program of unifying knowledge. The greatest involves finding a place for the normative in the theory of everything. Much of what goes on in moral philosophy, literature, the arts, and the human sciences (disciplines that Wilson would like to dispense with in their present form) is directed toward telling us how to live rather than how the world is. As long as we remain creatures who act as well as think and who moralize as well as conceptualize, it is hard to see how our system of knowledge can be unified in the way that Wilson wishes.

At a more practical level, we, the children of the Anthropocene Era, are entering the 4th industrial revolution, and its impact is going to be pervasive and of greater magnitude than that of the previous industrial revolutions. The incoming changes, which are approaching at an accelerating speed, will be impacting everything and everybody and blurring the lines between the physical, digital, and biological spheres; they will affect the bio-psycho-social dimensions, our narratives and even what it means to be human. Narrative is a major cognitive instrument to deal both with the irrevocability of the past and with the contingency of future events, dealing at its core with a retrospective perspective on events which used to be future or contingent, but have since become past and irrevocable. Narrative is, more specifically, a way to handle the human time of action, experience, and social relationships. Therefore, narrative is an emergent phenomenon which turns back on the evolutionary nature of cosmic reality itself. If we are not farsighted and do not plan effectively, the results could be very problematic for all life forms on Earth. If we manage the 4th industrial revolution with the same blindness and forms of denial with which we managed the previous industrial revolutions, the negative effects will be exponential (Zucconi, 2016). At the social level, inequality and unemployment destroy opportunity freedom. Radical inequality significantly undermines opportunity freedoms and capacity freedoms and consequently radically undermines human capital as a foundation of community prosperity (Nagan, 2016).

As the global age brings new possibilities and challenges, we need to think in much broader terms than ever before. Where in the past ethics narrative could be grounded in relatively restricted calculi of good and evil, according to the specific character of the persons, substances or natures involved, now we find that small actions have global effects and that these are filtered through a massive array of cultures. Narrative models the limited openness of the past through selectivity and perspectivism, and therefore stands in the no-man’s land between the irrevocability of the past and the emergence of “new pasts.” Time, understood as the conscious time experienced by brains, and not merely as the unconscious time of sentient life, has complexified reality into a new dimension: the virtual reality of experience mediated by memory, by anticipations, by expectations. It is time made conscious through representations. Therefore, the brain can be conceived as a virtual reality machine, a narrative machine, and a harmonization machine. The brain manages an organism’s response to stimuli, including decisions resulting from the sum and the relative weight of stimuli. A more elaborate brain anticipates possible responses, creates scenarios and models possible realities depending on the outcome of choices. Narrative also requires, however, a critical perspective on the potential fallacies which accompany narrative explanations, notably hindsight bias. This is the main reason why we need reliable and effective training tools to achieve full narrative and predicative proficiency, such as the EPM (De Giacomo, 1993) and EEPM (Fiorini, 2017a).

Hence, an aesthetic narrative dimension needs to be added to ethics, so that human beings and societies are truly mobilized to bring together their distinctive gifts in order to work toward a global world marked by equity and balance, harmony and peace. Human culture can intensify this virtualization of the environment, and human beings can inhabit the ultimate virtual reality game, a multidimensional space of cultural representations and semiotic objects which includes, finally and literally, actual narratives and virtual reality devices. Reality as we face it is a vast web of related phenomena, each of which appears to be supervenient, or “just-so”, the result of a contingent facticity inherent to the complex structure of the universe itself.

Interdisciplinary and transdisciplinary education are the directions in which society, together with scientists and scholars, must move. Interdisciplinary education consists, for instance, of a multidisciplinary relational reformulation of problems, such as from theoretical to practical representations, from mechanical to electromagnetic, from chemical to biological, from clinical research to healthcare, etc. Transdisciplinary education, on the other hand, concerns the study of how to achieve optimal reformulations of those problems and of their interrelated fundamental properties. We need to recall the dawn of transdisciplinary thinking in Jean Piaget’s words (Piaget, 1972a, p. 138):

“…higher stage succeeding interdisciplinary relationships... which would not only cover interactions or reciprocities between specialised research projects, but would place these relationships within a total system without any firm boundaries between disciplines.”

3. Transdisciplinary Education

In the past century, in their questioning of the foundations of science, Edmund Husserl (Husserl, 1966) and other scholars discovered the existence of different levels of perception of Reality by the subject-observer. But these thinkers, pioneers in the exploration of a multi-dimensional and multi-referential reality, have been marginalized by academic philosophers and misunderstood by a majority of physicists, who are cocooned in their respective specializations.

Werner Heisenberg came very near, in his philosophical writings, to the concept of “level of Reality”. In his famous manuscript of 1942 (published only in 1984) (Heisenberg, 1984), Heisenberg, who knew Husserl well, introduced the idea of three regions of reality, which gives access to the conception of “reality” itself. The first region is that of classical physics, the second that of quantum physics, biology and psychic phenomena, and the third that of religious, philosophical and artistic experiences. This classification has a subtle ground: the closer and closer connectedness between the Subject and the Object.

As a matter of fact, ontology, in its philosophical meaning, is the discipline investigating the structure of reality. Its findings can be relevant to knowledge organization, and models of knowledge can, in turn, offer relevant ontological suggestions. Several philosophers in time have pointed out that reality is structured into a series of integrative levels, like the physical, the biological, the mental, and the cultural, and that each level acts as a base for the emergence of more complex levels. More detailed theories of levels have been developed by Nicolai Hartmann (Hartmann, 1942) and James K. Feibleman (Feibleman, 1954), and these have been considered as a source for structuring principles even in bibliographic classification by both the Classification Research Group (CRG, 1969) and German information scientist and philosopher Ingetraut Dahlberg’s (Dahlberg, 1978) ICC (Information Coding Classification) (ICC, 2017).

CRG’s analysis of levels and of their possible application to a new general classification scheme based on phenomena instead of disciplines, as it was formulated by Derek Austin in 1969 (Austin, 1969), has been examined in detail by Gnoli & Poli (2004). The analyses by Feibleman and Austin refer to a level either as the whole or as a part. However, it is worth mentioning that the theory of levels has been studied by most of the scholars who have elaborated its details as a way to improve both the (traditional) theory of being and the theory of wholes. The interpretation of “level” as either the whole or a part runs into serious trouble as soon as psychological and social factors are taken into account. On the other hand, Dahlberg highlights the search for objective criteria of classification of the content of documents, making them adhere more closely to knowledge as it is developed by science: e.g., the structure of a classification should be based on levels, because reality itself has a levelled structure. ICC’s conceptualization goes beyond the scope of the well-known library classification systems, such as Dewey Decimal Classification (DDC), Universal Decimal Classification (UDC), and Library of Congress Classification (LCC), by extending also to knowledge systems that so far have not tried to classify literature. ICC actually presents a flexible universal ordering system for both literature and other kinds of information, set out as knowledge fields (Dahlberg, 2012).

Furthermore, it is important not to confuse ontological problems with those involved in their formal translation. In other words, care must be taken to distinguish between the ontological tree and the logical tree that should be its rigorous translation. Logic can be distinguished from formal ontology, but only in the sense of logic as uninterpreted calculus. The method for constructing abstract formal systems is subject to varying interpretations over varying domains. Above all, we must consider the fact that the definitive differentiation of the fundamental forms of words (noun and verb) in the Greek form of “onoma” and “rhema” was worked out and first established in the most immediate and intimate connection with the conception and interpretation of Being that has been definitive for the entire Western world. The inner bond between these two happenings is accessible to us unimpaired and is explored in full clarity in Plato’s Sophist.

In fact, the levels of Reality are radically different from the levels of the representation of system organization as these have been defined in recent systemic approaches (Nicolescu, 1992). Levels of organization do not presuppose a break with fundamental concepts: several levels of organization appear at one and the same level of Reality. The levels of organization correspond to different structuring of the same fundamental laws. For example, Marxist economy and classical physics belong to one and the same level of Reality.

Since the definitive formulation of quantum mechanics around 1930, founders of the new science have been acutely aware of the problem of formulating a new “quantum logic.” Subsequent to the work of Garrett Birkhoff and John von Neumann, a veritable flourishing of quantum logics was not long in coming (Brody, 1984). The aim of this new logic was to resolve the paradoxes which quantum mechanics had created and to provide a conceptual framework for understanding the many aspects of quantum mechanics which are not easily handled by the conceptual framework used for classical physics, all in the attempt, to the extent possible, to arrive at a predictive power stronger than that afforded by classical logic.

History will credit Stéphane Lupasco for having shown that the logic of the “included middle” is a true logic, formalizable and formalized, multivalent (with three values: A, ¬A (non-A), and T) and non-contradictory (Lupasco, 1987). His philosophy, which takes quantum physics as its point of departure, has been marginalized by physicists and philosophers. Curiously, on the other hand, it has had a powerful albeit underground influence among psychologists, sociologists, artists, and historians of religions.

Perhaps the absence of the notion of “levels of Reality” in his philosophy obscured its substance: many persons wrongly believed that Lupasco’s logic violated the principle of non-contradiction. Our understanding of the axiom of the included middle (i.e. there exists a third term T which is at the same time A and ¬A) is completely clarified once the notion of “levels of Reality” is introduced.

In order to obtain a clear image of the meaning of the included middle, we can represent the three terms of the new logic (A, ¬A, and T) and the dynamics associated with them by a triangle in which one of the vertices is situated at a finer level of Reality and the two other vertices at another level of Reality. If one remains at a single level of Reality, all manifestation appears as a struggle between two contradictory elements (e.g. wave A and corpuscle ¬A). The third dynamic, that of the T-state, is exercised at another level of Reality, where that which appears to be disunited (wave or corpuscle) is in fact united (quantum), and that which appears contradictory is perceived as non-contradictory. It is the projection of T on one and the same level of Reality which produces the appearance of mutually exclusive, antagonistic pairs (A and ¬A). A single level of Reality can only create antagonistic oppositions. It is inherently self-destructive if it is completely separated from all the other levels of Reality. A third term, let us call it T0, which is situated on the same level of Reality as that of the opposites A and ¬A, cannot accomplish their reconciliation.

The T-term is the key to understanding indeterminacy: being situated on a different level of Reality than A and ¬A, it necessarily induces an influence of its own level of Reality upon its neighboring and different level of Reality. The laws of a given level are therefore not self-sufficient to describe the phenomena occurring at that level.

The entire difference between a triad of the included middle and a Hegelian triad is clarified by consideration of the role of time. In a triad of the included middle the three terms coexist at the same moment in time. On the contrary, each of the three terms of the Hegelian triad succeeds the former in time. This is why the Hegelian triad is incapable of accomplishing the reconciliation of opposites, whereas the triad of the included middle is capable of it. In the logic of the included middle the opposites are rather contradictories: the tension between contradictories builds a unity which includes and goes beyond the sum of the two terms. The Hegelian triad would never explain the nature of indeterminacy.

One also sees the great dangers of misunderstanding engendered by the common enough confusion created between the axiom of the excluded middle and the axiom of non-contradiction. The logic of the included middle is non-contradictory in the sense that the axiom of non-contradiction is thoroughly respected, a condition which enlarges the notions of “true” and “false” in such a way that the rules of logical implication no longer concern two terms (A and ¬A) but three terms (A, ¬A and T), co-existing at the same moment in time. This is a formal logic, just as any other formal logic: its rules are derived by means of a relatively simple mathematical formalism. The logic of the included middle does not abolish the logic of the excluded middle: it only constrains its sphere of validity.

The logic of the excluded middle is certainly valid for relatively simple situations. By contrast, it is harmful in complex, transdisciplinary cases. The term “transdisciplinary,” as carrying a different meaning from “interdisciplinary,” was first introduced in 1970 by Jean Piaget (Piaget, 1972b). From an etymological point of view, “trans” means “between, across, beyond.” Basarab Nicolescu means by the term “transdisciplinary” that “which deals with what is at the same time between disciplines, through disciplines and beyond any discipline” (Nicolescu, 1996). Therefore, “transdisciplinarity” is clearly not a new discipline but a new human worldview. See also the Internet site of the International Center for Transdisciplinary Research (CIRET) (CIRET, 2015). In this way, one can see why the logic of the included middle is not simply a metaphor, like some kind of arbitrary ornament for classical logic, which would permit adventurous incursions into the domain of complexity. The logic of the included middle is the privileged logic of complexity, privileged in the sense that it allows us to cross and to navigate the different areas of knowledge in a coherent way, by enabling a new kind of simplicity.

Applied to education, transdisciplinarity is an essential step in the formation and becoming of man in the facilitation of his access and participation in the socio-cultural and spiritual life. The current living generation is experiencing a transition from history to hyperhistory. Advanced information societies are more and more heavily dependent on ICTs (Information and Communication Technologies) for their normal functioning and growth. Processing power will increase, while becoming cheaper and cheaper. The amount of data will reach unthinkable quantities. And the value of our networked resources will grow almost vertically. However, our storage capacity (space) and the speed of our communications (time) are still lagging behind. Hyperhistory is a new era in human development, but it does not transcend the spacetemporal constraints that have always regulated our life on this planet. The question to be addressed next is: “given all the variables we know, what sort of hyperhistorical environment are we building for ourselves and for future generations?” The short answer is: the “Infosphere.” For the long answer, see Floridi (2014). The acquisition of information specific to a domain of knowledge needs to be made with the idea of maintaining the completeness of knowledge through the interaction with other fields of knowledge. These relationships and interrelationships actually express the unity of the studied phenomenon as part of reality.

Transdisciplinary education and transdisciplinarity propose an understanding of the present world by providing more unified knowledge to overcome the famous paradox of relative knowledge, according to which the sphere of knowledge increases in arithmetic progression while the area of ignorance increases in geometric progression, so that each problem solved comes at the cost of acquiring two new ones, and so on. The solution that we propose is another paradox, much less known, that of perfect knowledge, according to which only when we understand the reasons why we cannot achieve real knowledge does it become accessible to us. In other words, by understanding why we cannot understand, we begin to understand! It is the same apparent paradox we have in current computer computation as underlined by CICT, where computation can use either an “approximated approximation” or an “exact approximation” representation system. To achieve an exact approximation computational number representation, logic must be described in terms of “self-closure spaces” to avoid the contemporary IDB (Information Double Bind) problem (Fiorini, 2014, 2017b). Reality is the temporal unfolding of events, and path dependence is a central concept to explain complex emergent phenomena.

4. Working Examples

Looking back over decades and centuries of scientific research, many examples of transdisciplinary thinking can be identified, even from times when the transdisciplinary concept itself did not yet have this name. We would like to present here an initial list of the most outstanding and promising ones. Usually these efforts are conceived at the conceptual level by a leading researcher and then followed by others over an interval of time that may span decades or even centuries before the first experimental verification is obtained. Chronologically, we start with “primary water” in 1896, then the “equivalence principle” in general relativity theory (GRT) in 1915, the “wave-particle duality” (WPD) of quantum mechanics (QM) in 1924, quantum gravity (QG) in 2008, the “continuum-discrete duality” in computational information conservation theory (CICT) in 2013, etc. The present article focuses on the first three examples of this list only. Because of their importance, the others will be addressed in a different paper.

4.1. Example #1: Primary Water

The first example of transdisciplinary thinking comes from a group of researchers starting with the Finnish-Swedish baron, geologist and mineralogist Nils Adolf Erik Nordenskiöld. In 1896, Nordenskiöld, a Stockholm professor of mineralogy and Arctic explorer, published an essay, “About Drilling for Water in Primary Rocks” (Nordenskiöld, 1896), which was to win him a nomination for the Nobel Prize in physics, though he died before the prize was actually awarded. He was inspired by his father, the Chief of Mining in Finland, who told him that iron mines along the Finnish coast were never penetrated by sea water, but always had fresh water present. During the last part of the century, Nordenskiöld was finding water by drilling into promontories and rocky islands off the Swedish coast. He concluded that water was formed deep within the Earth and could be found in hard rock. He called this water “primary,” due to its association with so-called “primary rocks,” which geologists term magmatic, that is, rocks such as granites, basalts, and rhyolites, which derive from the molten magma deep within the Earth and later cool to crystallize into igneous rocks. He also affirmed that one could sink wells capable of producing such “primary water” year-round along the northern and southern coast of the Mediterranean Sea and in the whole of Asia Minor, precisely the best known part of the world afflicted with aridity.

The eminent mining geologist Josiah Edward Spurr, in his two-volume treatise published in 1923, called attention to the fact that the existence of water as an essential component of igneous magmas had long been recognized. Its existence was clearly shown by the vast clouds of water droplets that condense from the emitted vapor during volcanic eruptions. The fundamental idea that there is a thermodynamic cycle within the Earth that both produces and is fueled by water was still a concern at least up to 1942, when Oscar Meinzer, formerly head of the Groundwater Division of the U.S. Geological Survey, set out in his book Hydrology the view that waters of internal origin are tangible additions to the Earth’s water supply. Fifteen years before the publication of his book, Meinzer in a long essay referred to huge springs in the United States that yield 5,000 gallons or more per minute. This phenomenon is not confined to the United States. One incredibly productive water source flowing out of limestone is the Ain Figeh spring that alone supplies water for over one million residents of Damascus, Syria, and is also the principal source of the Barada River.

In the 1930s, Bavarian-born mining engineer and geologist Stephan Riess, while working in a deep mine at high elevation, after a load of dynamite had been set off in the bottom of it, was amazed to see water gushing out in such quantities that pumps installed to remove it at the rate of 25,000 gallons per minute could not make a dent in the flow. Staring forth into the valley below, Riess asked himself how water that supposedly had trickled into the Earth as rain could rise through hard rock into the shafts and tunnels of a mine nearly at the top of a mountain range. The temperature and purity of the water suggested to Riess it must have a completely different origin than ordinary groundwater. Since none of the textbooks he had studied had referred to what seemed to confront him as an entirely anomalous phenomenon, he decided to look into it further.

Research undertaken by Riess in 1934 showed enormous quantities of virgin water could be obtained from crystalline rocks. This involved a combination of geothermal heat and a process known as triboluminescence, a glow which electrons in the rocks discharge as a result of friction or violent pressure, that can actually release oxygen and hydrogen gases in certain ore-bearing rocks. This process, called “cold oxidation,” can form virgin or primary water. Riess was able to tap straight into formations of hard desert rock of the right composition and produce as much as 8,000 liters per minute. Oxygen and hydrogen combine under the electromechanical forces of the earth to form liquid water. In 1957, after Riess had been working on the problem for nearly two decades, Encyclopedia Britannica’s Book of the Year ran the following statement: “Stephan Riess of California formulated a theory that ‘new water’ which never existed before, is constantly being formed within the earth by the combination of elemental hydrogen and oxygen and that this water finds its way to the surface, and can be located and tapped, to constitute a steady and unfailing new supply.”

In 1960, Michael Salzman, then a professor at the University of California’s School of Commerce, who had served as an engineer with the U.S. Navy’s Hydrographic Office, summed up his research work of over thirty years in the book titled “New Water for a Thirsty World” underlining the limitations of modern hydrology science, concerned exclusively with the hydrologic cycle in all of its various aspects (Salzman, 1960). Modern hydrology, as a science, is considered to have begun with the work of Pierre Perrault (1608-1680) and of Edme Mariotte (1620-1684). These men had, for the first time, put hydrology on a quantitative basis. The science of hydrology has progressed from these early beginnings, but it must be realized that it deals exclusively with surface water run-off and ground water run-off through pervious granular materials and that these ignore consolidated rock fissure waters for all practical purposes and the chemistry of the earth, the chemical reactions which produce the waters of internal origin. Salzman put into evidence our ignorance about geochemistry vs. hydrology, and mental blocks. They exist because thinking processes follow along certain patterns that have been molded and shaped by training, and by the social, psychological, and economic environment of the individual. In essence, mental blocks are the result of self-imposed restrictions, which are unknowingly interposed and which sometimes prevent the solving of problems.

Within the thin crust of the earth are temperatures that vaporize iron and pressures that keep molten rock solid. Absorbed in everyday life problems, man is nonetheless periodically reminded of the deep mysteries beneath his feet when the elemental forces of pressure and high temperature breach a fracture in the earth’s crust (Coghlan, 2017). Salzman gave ample evidence of a dynamic earth, to show the existence of magmatic, metamorphic, and volcanic water. Indeed, the earth’s water supply is thought to be derived from the interior of the earth. Riess successfully drilled 753 documented primary water wells on four continents. Today such projects are underway in Nigeria, Madagascar and many other countries (PWT, 2017).

Salzman’s transdisciplinary vision aggregated the relational knowledge on geochemistry, petrology, mineralogy, crystallography, physical chemistry, as well as structural geology to offer his new worldview of a dynamic earth crust. He concludes his book remembering that with proper management, this earth can abundantly supply its inhabitants with all they require. But this is not the route we travel; to change our course requires meaningful education and understanding. “History is,” as H. G. Wells describes it, “a race between education and catastrophe.” What is your choice?

4.2. Example #2: General Relativity Theory (GRT)

The second example of transdisciplinary thinking comes from the German-born theoretical physicist Albert Einstein with the enunciation of his “equivalence principle” (EP) for General Relativity Theory (GRT). In GRT, the EP is any of several related concepts dealing with the equivalence of gravitational and inertial mass, and with Albert Einstein’s observation that the gravitational “force” as experienced locally while standing on a massive body (such as the Earth) is actually the same as the pseudo-force experienced by an observer in a non-inertial (accelerated) frame of reference. GRT is a theory of gravitation that was developed by Albert Einstein between 1907 and 1915, with contributions by many others after 1915. According to GRT, the observed gravitational attraction between masses results from the warping of space and time by those masses. The interested reader is referred to HGRT (2017) for a brief historical development of this theory.

Einstein’s ground-breaking realization (which he called “the happiest thought of my life”) was that gravity is in reality not a force at all, but is indistinguishable from, and in fact the same thing as, acceleration, an idea he called the “principle of equivalence.” He realized that if he were to fall freely in a gravitational field (a skydiver before opening his parachute, or a person in an elevator when its cable breaks), he would be unable to feel his own weight, a rather remarkable insight in 1907, many years before the idea of freefall of astronauts in space became commonplace.
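The equivalence of inertial and gravitational mass described above can be written as a short worked equation. This is a minimal sketch in Newtonian terms, not Einstein's own derivation; the symbols $m_i$ (inertial mass), $m_g$ (gravitational mass), $g$ (local gravitational field strength), $a$ (acceleration) and $W$ (apparent weight) are notation introduced here for illustration.

```latex
% Newton's second law for a body falling in a uniform gravitational field
m_i \, a = m_g \, g
\quad\Longrightarrow\quad
a = \frac{m_g}{m_i} \, g .

% If inertial and gravitational mass are equal, every body falls alike
m_g = m_i
\quad\Longrightarrow\quad
a = g \quad \text{(independent of the mass of the body)} .

% Apparent weight measured in a frame that itself accelerates downward at a
W = m_g \, g - m_i \, a = m \, (g - a),
\qquad
W = 0 \ \text{ when } a = g \ \text{(free fall)} .
```

The last line is the freely falling observer of the elevator example: with $a = g$ the apparent weight vanishes, so locally the observer cannot distinguish free fall in a gravitational field from the absence of gravity.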

So, gravity, Einstein realized, is not really a force at all, but just the result of our surroundings accelerating relative to us. Or, to look at it in perhaps a better way, gravity is a kind of inertial force, in the same way as the so-called centrifugal force is not a force in itself, merely the effect of a body’s inertia when forced into a circular path. In order to rationalize this situation, though, Einstein was to overturn our whole conception of space. In fact, according to Einstein’s worldview, matter does not simply pull on other matter across empty space, as Newton had imagined. Rather, matter distorts spacetime, and it is this distorted spacetime that in turn affects other matter. Objects (including planets, like the Earth, for instance) fly freely under their own inertia through warped spacetime, following curved paths because this is the shortest possible path (or geodesic) in warped spacetime.

In theoretical physics, a mass generation mechanism is a theory which attempts to explain the origin of mass from the most fundamental laws of physics. To date, a number of different models have been proposed which advocate different views of the origin of mass. The problem is complicated by the fact that the notion of mass is strongly related to the gravitational interaction but a theory of the latter has not yet been reconciled with the currently popular model of particle physics, known as the Standard Model mentioned in the previous section (yes, we know about the Higgs Boson mechanism, but its reality is substantiated by only one experimental laboratory evidence in the world. No other lab elsewhere is able to repeat that experimentation currently). On the other hand, inertial mass is the mass of an object measured by its resistance to acceleration.

Einstein’s transdisciplinary vision aggregated the relational knowledge on energy, gravitational mass, inertial mass, mechanics, dynamics and geometry, offering a unique new worldview. That is the reason for the well-known term “geometrodynamics,” which is used as a synonym for “general relativity.” More properly, some authors use the phrase “Einstein’s geometrodynamics” to denote the initial value formulation of general relativity, introduced by American physicists Richard L. Arnowitt, Stanley Deser, and Charles W. Misner (ADM formalism) around 1960. In this reformulation, spacetimes are sliced up into spatial hyperslices in a rather arbitrary fashion, and the vacuum Einstein field equation is reformulated as an evolution equation describing how, given the geometry of an initial hyperslice (the “initial value”), the geometry evolves over “time.” This requires giving constraint equations which must be satisfied by the original hyperslice. It also involves some “choice of gauge”; specifically, choices about how the coordinate system is used to describe how the hyperslice geometry evolves.
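For readers who want to see what the slicing, the constraint equations and the choice of gauge look like in symbols, the following is a sketch in one common textbook notation. The notation is an assumption made here and is not taken from the article: $N$ is the lapse function, $N^i$ the shift vector, $h_{ij}$ the spatial metric of a hyperslice, $K_{ij}$ its extrinsic curvature with trace $K$, ${}^{(3)}R$ its scalar curvature, and $D_j$ the covariant derivative compatible with $h_{ij}$. Sign conventions vary between authors.

```latex
% 3+1 decomposition of the spacetime line element
ds^2 = -N^2 \, dt^2
       + h_{ij} \left( dx^i + N^i \, dt \right) \left( dx^j + N^j \, dt \right)

% Constraints that the initial hyperslice must satisfy in vacuum
{}^{(3)}R + K^2 - K_{ij} K^{ij} = 0
\qquad \text{(Hamiltonian constraint)}

D_j \left( K^{ij} - h^{ij} K \right) = 0
\qquad \text{(momentum constraint)}
```

In this notation the “choice of gauge” mentioned above corresponds to the freedom to prescribe the lapse $N$ and the shift $N^i$, which fix how the coordinates are carried from one hyperslice to the next.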

During the 1950s American theoretical physicist John A. Wheeler developed a program of physical and ontological reduction of every physical phenomenon to the geometrical properties of a curved space-time in an even more fundamental way than the ADM reformulation of general relativity, with a dynamic geometry whose curvature changes with time, called “Geometrodynamics” (Wheeler, 1962). He wanted to lay the foundation for “quantum gravity” and unify gravitation with electromagnetism (the strong and weak interactions were not yet sufficiently well understood in 1960). Wheeler introduced the notion of “geons,” gravitational wave packets confined to a compact region of spacetime and held together by the gravitational attraction of the (gravitational) field energy of the wave itself. Wheeler was intrigued by the possibility that geons could affect test particles much like a massive object, hence mass without mass.

In the ADM reformulation of general relativity, Wheeler argued that the full Einstein field equation can be recovered once the momentum constraint can be derived, and suggested that this might follow from geometrical considerations alone, making general relativity something like a logical necessity. Specifically, curvature (the gravitational field) might arise as a kind of “averaging” over very complicated topological phenomena at very small scales, the so-called “spacetime foam.” This would realize geometrical intuition suggested by quantum gravity, or field without field.

More recently, theoretical physicist Christopher Isham, philosopher Jeremy Butterfield and their students have continued to develop quantum geometrodynamics to take account of recent work toward a quantum theory of gravity and further developments in the very extensive mathematical theory of initial value formulations of general relativity. Some of Wheeler’s original goals remain important for this work, particularly the hope of laying a solid foundation for quantum gravity. The philosophical program also continues to motivate several prominent contributors. Topological ideas in the realm of gravity date back to Riemann, Clifford and Weyl who found a more concrete realization in the wormholes of Wheeler characterized by the Euler-Poincaré invariant.

GRT has been proven remarkably accurate and robust in many different tests over the last century. The slightly elliptical orbit of planets is also explained by the theory but, even more remarkably, it also explains with great accuracy the fact that the elliptical orbits of planets are not exact repetitions but actually shift slightly with each revolution, tracing out a kind of rosette-like pattern. For instance, it correctly predicts the so-called precession of the perihelion of Mercury (that the planet Mercury traces out a complete rosette only once every 3 million years), something which Newton’s Law of Universal Gravitation is not sophisticated enough to cope with.
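The figure of roughly 3 million years per rosette can be checked against the standard general-relativistic formula for the perihelion advance per orbit, $\Delta\varphi = 6\pi GM / (c^2 a (1 - e^2))$. The sketch below is only an illustration: the constants and Mercury's orbital elements are standard reference values supplied here, not values from the article, and the calculation covers the relativistic contribution alone (the much larger precession caused by the other planets is already accounted for by Newtonian theory).

```python
import math

# Reference values assumed for this sketch (not taken from the article)
GM_SUN = 1.32712440018e20   # gravitational parameter of the Sun, m^3/s^2
C = 299_792_458.0           # speed of light, m/s
A_MERCURY = 5.7909e10       # semi-major axis of Mercury's orbit, m
E_MERCURY = 0.2056          # eccentricity of Mercury's orbit
PERIOD_DAYS = 87.969        # orbital period of Mercury, days
DAYS_PER_YEAR = 365.25


def perihelion_advance_per_orbit(gm: float, a: float, e: float) -> float:
    """Relativistic perihelion advance per orbit, in radians."""
    return 6.0 * math.pi * gm / (C**2 * a * (1.0 - e**2))


dphi = perihelion_advance_per_orbit(GM_SUN, A_MERCURY, E_MERCURY)

# Accumulated advance over one century, converted to arcseconds
orbits_per_century = 100.0 * DAYS_PER_YEAR / PERIOD_DAYS
arcsec_per_century = math.degrees(dphi * orbits_per_century) * 3600.0

# Time for the perihelion to turn through a full 360 degrees
years_per_rosette = (2.0 * math.pi / dphi) * PERIOD_DAYS / DAYS_PER_YEAR

print(f"advance per century: {arcsec_per_century:.1f} arcsec")
print(f"full rosette: {years_per_rosette / 1e6:.2f} million years")
```

Under these assumptions the advance comes out at about 43 arcseconds per century, which corresponds to one complete rosette in about 3 million years, consistent with the figure quoted above.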

Almost a century later, GRT remains the single most influential theory in modern physics, and one of the few that almost everyone, from all walks of life, has heard of (even if they may be a little hazy about the details). Einstein’s GRT predicted the existence of black holes many years before any evidence of such phenomena, even indirect evidence, was obtained. Yet another theoretical prediction of GRT is the existence of gravitational waves, perturbations or ripples in the fabric of space-time, caused by the motion of massive objects, that propagate throughout the universe as objects are squeezed on a subatomic scale. There has been good circumstantial evidence for these elusive waves since the 1970s, but it was only in late 2015 that gravitational waves were definitively observed at the twin Laser Interferometer Gravitational-wave Observatory (LIGO) detectors in the USA. This potentially opens up a whole new way of looking at the universe. On this line of thinking, it is worth mentioning that something like the Einstein EP emerged in the early 17th century, when Galileo showed experimentally that the acceleration of a test mass due to gravitation is independent of the amount of mass being accelerated. This is known as the “weak equivalence principle,” also known as the “universality of free fall” or the “Galilean equivalence principle.” The weak EP assumes falling bodies are bound by non-gravitational forces only (Wagner et al., 2012). The strong EP (Einstein equivalence principle) includes (astronomic) bodies with gravitational binding energy.

On 14 September 2015, the universe’s gravitational waves were observed for the very first time. The waves, which were predicted by Albert Einstein a hundred years ago, came from a collision between two black holes. It took 1.3 billion years for the waves to arrive at the LIGO detector in the USA. In 2017, Rainer Weiss, Barry C. Barish and Kip S. Thorne were awarded the Nobel Prize in Physics “for decisive contributions to the LIGO detector and the observation of gravitational waves” (TNPP, 2017). It is also another indication of just how robust GRT is. Gravitational waves spread at the speed of light, filling the universe, as Albert Einstein described in his GRT. They are always created when a mass accelerates, like when an ice-skater pirouettes or a pair of black holes rotate around each other. Einstein was convinced it would never be possible to measure them. The LIGO project’s achievement was made possible by using a pair of gigantic laser interferometers to measure a change thousands of times smaller than an atomic nucleus, as the gravitational wave passed the Earth. Gravitational waves are direct testimony to disruptions in spacetime itself. This is something completely new and different, opening up unseen worlds. A wealth of discoveries awaits those who succeed in capturing the waves and interpreting their message.
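To give a feeling for the phrase “thousands of times smaller than an atomic nucleus,” the following order-of-magnitude sketch compares the change in arm length with the size of a heavy nucleus. All numbers are assumptions made for illustration and do not come from the article: a strain amplitude of about 1e-21, an interferometer arm of 4 km, the empirical nuclear radius rule r = r0 * A^(1/3) with r0 of about 1.2 femtometres, and a gold nucleus (A = 197) as an arbitrary example of a heavy nucleus.

```python
# Order-of-magnitude sketch; every value below is an illustrative assumption
STRAIN = 1e-21          # dimensionless strain amplitude, roughly the scale of the first detection
ARM_LENGTH_M = 4000.0   # length of one interferometer arm, metres
R0_M = 1.2e-15          # empirical nuclear radius constant, metres
MASS_NUMBER = 197       # gold, chosen here only as an example of a heavy nucleus

# Change in arm length produced by the passing wave: delta_L ~ strain * L
arm_change_m = STRAIN * ARM_LENGTH_M

# Approximate nuclear diameter from r = r0 * A^(1/3)
nucleus_diameter_m = 2.0 * R0_M * MASS_NUMBER ** (1.0 / 3.0)

# How many times smaller the arm change is than the nucleus
ratio = nucleus_diameter_m / arm_change_m

print(f"arm length change: {arm_change_m:.1e} m")
print(f"nucleus diameter:  {nucleus_diameter_m:.1e} m")
print(f"nucleus / change:  {ratio:.0f}")
```

With these assumed values the change in arm length is a few times 1e-18 m, a few thousand times smaller than the diameter of a heavy nucleus. The exact factor depends on which nucleus and which strain value are chosen.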

4.3. Example #3: All matter has wave properties

The third example of transdisciplinary thinking comes from French physicist Louis de Broglie with the enunciation of his “wave-particle duality” (WPD) principle. In his 1924 PhD thesis he postulated the wave nature of electrons and suggested that all matter has wave properties. This concept is known as the “de Broglie hypothesis,” an example of WPD, and forms a central part of the theory of quantum mechanics (deBroglie, 1926). The 1925 pilot-wave model and the wave-like behaviour of particles discovered by de Broglie were used by Erwin Schrödinger in his formulation of wave mechanics (Valentini, 1992). The pilot-wave model and its interpretation were then abandoned in 1927, in favor of the quantum formalism, until 1952, when they were rediscovered and enhanced by David Bohm.

In theoretical physics, the pilot wave theory, also known as “Bohmian mechanics,” was the first known example of a hidden variable theory, presented by Louis de Broglie in 1927 (deBroglie, 1927). De Broglie won the Nobel Prize in Physics in 1929 (TNPP, 1929) “for his discovery of the wave nature of electrons”, after the wave-like behaviour of matter was first experimentally demonstrated in 1927 by the Davisson–Germer experiment (DGE, 2017). De Broglie’s transdisciplinary vision aggregated relational knowledge on energy, inertial mass, wave mechanics and dynamics in a unique new worldview.
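The de Broglie hypothesis assigns to a particle of momentum p the wavelength λ = h/p, where h is the Planck constant. The sketch below evaluates this relation for a slow electron. The function name and the choice of a 54 eV kinetic energy (a value commonly quoted for the Davisson–Germer experiment) are assumptions made here for illustration, not details taken from the text, and the non-relativistic momentum p = sqrt(2mE) is assumed.

```python
import math

# Physical constants (CODATA 2018 values).
PLANCK_H = 6.62607015e-34            # Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31     # electron rest mass, kg
ELEMENTARY_CHARGE = 1.602176634e-19  # joules per electronvolt


def de_broglie_wavelength(mass_kg: float, kinetic_energy_ev: float) -> float:
    """Return lambda = h / p in metres for a non-relativistic particle.

    Hypothetical helper: the momentum is taken as p = sqrt(2 m E),
    which is only valid when E is far below the rest energy m c^2.
    """
    if mass_kg <= 0 or kinetic_energy_ev <= 0:
        raise ValueError("mass and kinetic energy must be positive")
    energy_j = kinetic_energy_ev * ELEMENTARY_CHARGE
    momentum = math.sqrt(2.0 * mass_kg * energy_j)
    return PLANCK_H / momentum


if __name__ == "__main__":
    # Illustrative energy; not a value given in the article.
    wavelength = de_broglie_wavelength(ELECTRON_MASS, 54.0)
    print(f"{wavelength * 1e9:.3f} nm")
```

For this energy the wavelength comes out at roughly 0.17 nm, which is comparable to the spacing between atoms in a crystal and is why a crystal can act as a diffraction grating for electrons.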

In quantum mechanics, the Schrödinger equation describes the changes over time of a physical system in which quantum effects, such as WPD, are significant. It is considered a central result in the study of quantum systems, and its derivation was a significant landmark in developing the theory of quantum mechanics. It was named after Erwin Schrödinger, who derived the equation in 1925 and published it in 1926 (Schrödinger, 1926). It formed the basis for the work that earned him the Nobel Prize in Physics in 1933 (TNPP, 1933), shared with Paul Adrien Maurice Dirac, “for the discovery of new productive forms of atomic theory.”
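For reference, the equation can be written in its standard textbook form for a single particle of mass m in a potential V. The notation below is assumed here and is not taken from the original article:

```latex
% Time-dependent Schrödinger equation, single particle of mass m
% in a potential V(r, t); psi is the wave function (notation assumed).
i\hbar \, \frac{\partial \psi(\mathbf{r}, t)}{\partial t}
  = \left[ -\frac{\hbar^2}{2m} \nabla^2 + V(\mathbf{r}, t) \right]
    \psi(\mathbf{r}, t)
```

The left-hand side gives the rate of change of the wave function in time, and the bracket on the right collects the kinetic and potential energy operators.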

The de Broglie–Bohm theory, also known as the “pilot wave theory,” “Bohmian mechanics,” “Bohm’s interpretation,” and the “causal interpretation,” is a deterministic interpretation of quantum theory. In addition to a wave function on the space of all possible configurations, it also postulates an actual configuration that exists even when unobserved. The evolution over time of the configuration (that is, the positions of all particles or the configuration of all fields) is defined by the wave function through a “guiding equation.” The evolution of the wave function over time is given by Schrödinger’s equation. The theory is deterministic (Bohm, 1952) and explicitly nonlocal: the velocity of any one particle depends on the value of the guiding equation, which depends on the configuration of the system given by its wave function; the latter depends on the boundary conditions of the system, which in principle may be the entire universe. The theory results in a measurement formalism, analogous to thermodynamics for classical mechanics, that yields the standard quantum formalism generally associated with the Copenhagen interpretation. The theory’s explicit non-locality resolves the “measurement problem”, which is conventionally delegated to the topic of interpretations of quantum mechanics in the Copenhagen interpretation.
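As a sketch of what the guiding equation looks like, the commonly quoted form for N spinless particles is given below. The symbols Q_k (actual position of particle k) and m_k (its mass) are notation assumed here, not taken from the article:

```latex
% Guiding equation for the k-th of N spinless particles.
% Q_k: actual position of particle k, m_k: its mass,
% psi: wave function on configuration space (notation assumed).
\frac{d\mathbf{Q}_k}{dt}
  = \frac{\hbar}{m_k}\,
    \operatorname{Im}\!\left( \frac{\nabla_k \psi}{\psi} \right)
    (\mathbf{Q}_1, \ldots, \mathbf{Q}_N, t)
```

The right-hand side is evaluated at the positions of all N particles at once, which is where the explicit nonlocality described above enters: the velocity of particle k depends on where every other particle is at that instant.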

Scientists have uncovered a great similarity between fluid dynamics and the WPD of quantum mechanics. We should not take macroscopic analogy as proof of microscopic reality, but certainly it demonstrates that this sort of behavior does happen in this universe, at least on some scales. Furthermore, it suggests that the mathematics of quantum mechanics represents the physics of “time,” with classical physics representing long-term statistical behavior of processes over a period of time as in Newton’s differential equations. In 1831, English scientist Michael Faraday was the first to notice oscillations of one frequency being excited by forces of double the frequency, in the crispations (ruffled surface waves) observed in a wine glass excited to “sing” (Faraday, 1831). Faraday waves, also known as “Faraday ripples,” are nonlinear standing waves that appear on liquids enclosed by a vibrating receptacle. When the vibration frequency exceeds a critical value, the flat hydrostatic surface becomes unstable. This is known as the “Faraday instability.” Faraday described them in an appendix to an article in the Philosophical Transactions of the Royal Society of London in 1831 (Faraday, 1831). The Faraday wave and its wavelength at the macroscale are analogous to the de Broglie wave and the de Broglie wavelength at the quantum level (Bush, 2010). In 1860 German physicist Franz E. Melde generated parametric oscillations in a string by employing a tuning fork to periodically vary the tension at twice the resonance frequency of the string (Melde, 1860). Parametric oscillation was first treated as a general phenomenon by Lord Rayleigh (Strutt, 1883, 1887).
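The mechanism shared by Faraday's and Melde's observations, an oscillation excited by a parameter modulated at twice its natural frequency, can be illustrated with a toy model. The sketch below integrates a single parametrically driven oscillator, x'' + ω0²(1 + ε cos(Ωt))x = 0, and compares driving at Ω = 2ω0 with driving at Ω = 3ω0. The function name, the parameter values and the choice of a fourth-order Runge–Kutta integrator are assumptions made here for illustration; it is a single-mode analogy, not a model of a fluid surface or a string.

```python
import math


def peak_amplitude(drive_ratio: float, epsilon: float = 0.2,
                   omega0: float = 1.0, t_end: float = 100.0,
                   dt: float = 0.01) -> float:
    """Return max |x(t)| on [0, t_end] for the oscillator
    x'' + omega0^2 * (1 + epsilon * cos(drive_ratio * omega0 * t)) * x = 0
    started from x(0) = 1, x'(0) = 0 (hypothetical illustrative helper).
    """
    drive = drive_ratio * omega0

    def accel(t: float, x: float) -> float:
        # Restoring force whose stiffness is modulated in time.
        return -omega0 ** 2 * (1.0 + epsilon * math.cos(drive * t)) * x

    t, x, v = 0.0, 1.0, 0.0
    peak = abs(x)
    for _ in range(int(round(t_end / dt))):
        # Classical fourth-order Runge-Kutta step for (x, v).
        k1x, k1v = v, accel(t, x)
        k2x, k2v = v + 0.5 * dt * k1v, accel(t + 0.5 * dt, x + 0.5 * dt * k1x)
        k3x, k3v = v + 0.5 * dt * k2v, accel(t + 0.5 * dt, x + 0.5 * dt * k2x)
        k4x, k4v = v + dt * k3v, accel(t + dt, x + dt * k3x)
        x += dt * (k1x + 2.0 * k2x + 2.0 * k3x + k4x) / 6.0
        v += dt * (k1v + 2.0 * k2v + 2.0 * k3v + k4v) / 6.0
        t += dt
        peak = max(peak, abs(x))
    return peak


if __name__ == "__main__":
    print("drive at 2*omega0:", peak_amplitude(2.0))
    print("drive at 3*omega0:", peak_amplitude(3.0))
```

With these illustrative parameters the oscillation driven at twice its natural frequency grows by well over an order of magnitude, while the one driven at three times its natural frequency stays close to its initial amplitude. The model has no damping, so it shows the instability itself rather than the threshold that a real, dissipative fluid bath would have.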

Floating droplets on a vibrating bath were first described in writing by Jearl Walker in a 1978 article in Scientific American (Walker, 1978). In 2005 a French team of scientists, led by physicists Yves Couder and Emmanuel Fort, discovered that a millimetric droplet bouncing on the surface of a vibrating fluid bath can self-propel by virtue of a resonant interaction with its own wave field (Couder et al., 2005a, 2005b). This system represents the first known macroscale example of a pilot-wave system of the form envisaged by Louis de Broglie in his double-solution pilot-wave theory (deBroglie, 1987; Milewski et al., 2015). Couder and associates set up an experiment with an oil-filled tray placed on a vibrating surface. When a drop of the same fluid is dropped onto the surface of the vibrating fluid, the droplet bounces on the surface rather than simply losing its shape upon impact. This bouncing droplet produces waves on the surface, and these waves cause the droplet to move along the surface. In 2006, this system was used to reproduce one of the most famous experiments in quantum mechanics, the double-slit experiment (DSE, 2017). It is interesting that, even if the bouncing droplet represents a whole atom surrounded by an electron probability cloud, the atom can go through either slit while the probability cloud goes through both, forming an interference pattern. Subsequent experimental work by Couder, Fort, Protière, Eddi, Sultan, Moukhtar, Rossi, Molacek, Bush and Sbitnev has established that bouncing drops exhibit single-slit and double-slit diffraction, tunnelling, quantised energy levels, Anderson localisation and the creation/annihilation of droplet/bubble pairs (Brady & Anderson, 2014).

Therefore, the bouncing droplet can represent the “particle” nature of a photon or electron, and the waves on the surface of the fluid can represent the wave nature that can go through both slits at the same time. If we break the bouncing-droplet experiment down into individual parts and ask ourselves what each part could represent in the quantum world of atoms, it could give us a fuller picture of quantum mechanics. In fluid dynamics, the double-slit experiment and the Copenhagen interpretation could be effectively represented where the fluid is the structure of spacetime and the quantum level is composed of little electromagnetic oscillators of the Planck size, also known as zero-point vacuum energy in quantum field theory (QFT). As a matter of fact, a walking droplet on a vibrating fluid bath was found to behave analogously to several different quantum mechanical systems, namely particle diffraction, quantum tunneling, quantized orbits, the Zeeman effect, and the quantum corral (Bush, 2017). It was also found that, much as in pilot wave theory, the current hydrodynamic model incorporates the nonlocality that is a characteristic feature of hidden variable theories.

We know that the Universe is never at absolute zero; therefore, there is always spontaneous absorption and emission of photon energy, forming photon oscillations or vibrations. This process naturally corresponds to the continuously vibrating surface of the tray in the experiment. We know that light has momentum and that momentum is frame dependent. We also know that the light photon forms the movement of charge with the continuous flow of electromagnetic fields relative to the atoms of the periodic table. Therefore, the droplet can represent a photon, electron or an atom within its own reference frame, and the waves can represent electromagnetic waves, with charge being an innate part of all matter. Therefore, the structure of spacetime is not merely curved to produce gravity but also curled, like water spinning down the drain. And this can take place in just three dimensions over a period of time, without the extra dimensions of “String Theory” or the “parallel universes” of Hugh Everett’s “many worlds” interpretation.

5. Conclusion

To provide reliable anticipatory knowledge, a system must produce predictions ahead of the predicted phenomena as fast as possible. These predictions then have to be verified by comparison at the reality level, validated and accepted, and finally remembered as learned reliable predictions. This validation cycle (emulation) allows the system to tune and adapt itself to its environment automatically and continuously. We need to recall Arthur Koestler’s words (Koestler, 1959):

“The inertia of the human mind and its resistance to innovation are most clearly demonstrated not, as one might expect, by the ignorant mass--which is easily swayed once its imagination is caught--but by professionals with a vested interest in tradition and in the monopoly of learning. Innovation is a twofold threat to academic mediocrities: it endangers their oracular authority, and it evokes the deeper fear that their whole, laboriously constructed intellectual edifice might collapse. The academic backwoodsmen have been the curse of genius from Aristarchus…; they stretch, a solid and hostile phalanx of pedantic mediocrities, across the centuries.”

Current traditional formal systems are unable to capture enough information to model natural systems realistically. They cannot capture, represent and describe real system emergent properties effectively. It is time for an Ontological Uncertainty Management (OUM) system upgrade (Fiorini, 2017c).

References

- Ansoff, H. Igor. “Managing Strategic Surprise by Response to Weak Signals.” California Management Review XVIII, no. 2 (1975): 21–33.

- Austin, Derek. “The theory of integrative levels reconsidered as the basis for a general classification.” In Classification and Information Control, edited by The Classification Research Group, 81-95. London: The Library Association, 1969.

- Bohm, David. “A Suggested Interpretation of the Quantum Theory in Terms of ‘Hidden Variables’ I.” Physical Review 85, no. 2 (1952): 166–179.

- Brady, Robert and Anderson, Ross. “Why bouncing droplets are a pretty good model of quantum mechanics.” arXiv, 2014. https://arxiv.org/abs/1401.4356

- Brody, T.A. “On Quantum Logic.” Foundations of Physics 14, no. 5 (1984): 409-430.

- Bush, John W. M. “Quantum mechanics writ large.” Talks, 2010. http://www.tcm.phy.cam.ac.uk/~mdt26/tti_talks/deBB_10/bush_tti2010.pdf

- Bush, John W. M. “Hydrodynamic quantum analogs.” Research Page, 2017. http://math.mit.edu/~bush/?page_id=484

- Byers, William. Deep Thinking. Singapore: World Scientific, 2015.

- CIRET, International Center for Transdisciplinary Research, 2015. http://ciret-transdisciplinarity.org/index_en.php (materials in French, English, Portuguese and Spanish).

- Coghlan, Andrew. “Planet Earth makes its own water from scratch deep in the mantle.” News & Technology, New Scientist 233, no. 3111, 4 February 2017.

- Couder, Yves, Fort, Emmanuel, Gautier, C.-H. and Boudaoud, Arezki. “From bouncing to floating: noncoalescence of drops on a fluid bath.” Physical Review Letters 94 (2005a): 177801.

- Couder, Yves, Protière, Suzie, Fort, Emmanuel and Boudaoud, Arezki. “Walking and orbiting droplets.” Nature 437 (2005b): 208.

- CRG, Classification Research Group. Classification and Information Control. London: Library Association, 1969.

- Dahlberg, Ingetraut. Ontical structures and universal classification. Bangalore: Sarada Ranganathan endowment for library science, 1978.

- Dahlberg, Ingetraut. “Brief Communication: A Systematic New Lexicon of All Knowledge Fields based on the Information Coding Classification.” Knowledge Organization 39, no. 2 (2012): 142-150. Würzburg, Germany: Ergon Verlag.

- de Broglie, Louis. Ondes et mouvements. Paris: Gauthier-Villars, 1926.

- de Broglie, Louis. “La mécanique ondulatoire et la structure atomique de la matière et du rayonnement.” Journal de Physique et le Radium 8, no. 5 (1927): 225–241.

- de Broglie, Louis. “Interpretation of quantum mechanics by the double solution theory.” Les Annales de la Fondation Louis de Broglie 12 (1987): 1–23.

- Deely, John. Four ages of understanding. The first postmodern survey of philosophy from ancient times to the turn of the Twenty-first century. Toronto: University of Toronto Press, 2001.

- DGE. “Davisson–Germer Experiment.” Wikipedia, 2017. https://en.wikipedia.org/wiki/Davisson%E2%80%93Germer_experiment

- Dorfles, Gillo. Horror Pleni. La (in)civiltà del rumore. Roma, IT: Castelvecchi, 2008.

- DSE. “Double-Slit Experiment.” Wikipedia, 2017. https://en.wikipedia.org/wiki/Double-slit_experiment

- Eco, Umberto. A Theory of Semiotics. London: Macmillan, 1976.

- Espejo, Raul and Reyes, Alfonso. Organizational Systems: Managing Complexity with the Viable System Model. Heidelberg: Springer, 2011.

- Faraday, Michael. “On a peculiar class of acoustical figures; and on certain forms assumed by a group of particles upon vibrating elastic surfaces.” Philosophical Transactions of the Royal Society (London) 121 (1831): 299–318.

- Feibleman, James K. “The integrative levels in nature.” British Journal for the Philosophy of Science 5, no. 17 (1954): 59-66. Also in Focus on Information, edited by Barbara Kyle, 27-41. London: Aslib, 1965.

- Fiorini, Rodolfo A. “How random is your tomographic noise? A number theoretic transform (NTT) approach.” Fundamenta Informaticae 135, nos. 1-2 (2014): 135-170.

- Fiorini, Rodolfo A. “Computerized tomography noise reduction by CICT optimized exponential cyclic sequences (OECS) co-domain.” Fundamenta Informaticae 141 (2015): 115–134.

- Fiorini, Rodolfo A. “New CICT framework for deep learning and deep thinking Application.” International Journal of Software Science and Computational Intelligence 8, no. 2 (2016): 1-21.